Effective RFPs Ignore Project Details and Cost

An RFP (Request for Proposals) is a technique companies use to select a vendor for a software project. Sometimes companies are required by policy to use an RFP process. Usually they believe the RFP is an effective way to select the best vendor.

I’ve seen quite a few really lousy RFPs. In fact, I think the typical RFP is a great example of a business “best practice” that is so poorly implemented that it has the opposite of its intended effect. Instead of simply complaining, I put together an outline for an RFP that I believe would actually help a client select the best vendor for their software project.

Most RFPs are focused on the software to be built. Mine focuses on the vendor and their team. I believe you should select the best vendor and team, then get working collaboratively with them on the project. If your project involves custom software, it will change as you work on it. Focusing potential vendors on project details in an RFP is asking them to speculate at the point of maximum ignorance. Not only is this unlikely to be useful in the long run, it distracts everyone from the more important selection criteria. Use the RFP to select the best vendor; collaborate with your vendor to build the best project.

Most RFPs have a heavily weighted section on project cost. The worst RFPs have little else, in fact. Given how little the vendor probably knows about the project at this point (remember, this is the point of maximum ignorance for everyone involved), cost is probably the single worst criterion you could use. In addition, saving even 50% on hourly rates or a fixed bid can’t always make up for a poorly selected vendor. If you don’t get the right product, or you get a buggy, unmaintainable mess, you might very well end up throwing it all out.

Focusing early on project cost makes you vulnerable to being told what you want to hear by unscrupulous vendors, and can actually result in substantially greater total cost. The lowest project cost comes from building the right software, the least amount of software possible, and software that you can economically maintain and extend. Choosing a vendor that has the right skills, an effective process, and a reliable way of hitting a given budget is what achieves the lowest possible cost and the best possible outcome.

After selecting the best vendor, negotiate hourly rates or other costs to get the most software possible built for your budget. But remember, your RFP process has helped you select a highly qualified vendor with a proven track record of success who employs highly skilled people, so don’t expect them to offer the lowest rates available as well. If your top-choice vendor has competitive rates, then your RFP has done its job, and now it’s time to start working with them to get the most you possibly can for your budget.

The rest of this post takes my RFP outline apart and explains each section and what you should look for in a vendor response.

I. Company

A. Year established

B. Brief creation story

C. Ownership

Your best vendor might be a brand new or young company, but if so, you had better look very closely into the individuals you’ll be dealing with.

Knowing why and how a company was created can tell you a lot about their values, beliefs, and motivations. A vendor that can’t describe their own creation story probably isn’t going to be very creative on your project, either.

Look for owners that work actively in the company. Some people feel broad ownership (e.g. an ESOP) can improve outcomes, too. Owners that are remote or passive are generally a bad sign.

D. Employee experience retention rate

Getting the best possible people who already know how to work together on your project is the single most important thing you can do. Good people have lots of choices for where they work. Knowing that a vendor retains its good staff tells you a lot about the company.

Everybody seems to calculate this in a slightly different way. Provide your own worksheet so you can compare vendors. I like to calculate how many person-months of experience a company has retained over some time period.
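The person-months calculation above can be sketched as a small worksheet. This is a minimal Python example under stated assumptions: "experience" means each person's tenure with the company in months at the start of the period, and the rate is the share of that starting experience still on staff at the end. The `Employee` type, the `experience_retention` function, and the data are hypothetical, a starting point for your own worksheet rather than a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Employee:
    # Hypothetical worksheet row: one per person on staff at the start of the period.
    name: str
    months_at_start: int        # tenure with the company, in months, at period start
    still_employed_at_end: bool


def experience_retention(staff: list[Employee]) -> tuple[int, float]:
    """Return (person-months retained, fraction of starting experience retained)."""
    total = sum(e.months_at_start for e in staff)
    retained = sum(e.months_at_start for e in staff if e.still_employed_at_end)
    rate = retained / total if total else 0.0
    return retained, rate


# Made-up example data for illustration only.
staff = [
    Employee("A", 60, True),
    Employee("B", 36, True),
    Employee("C", 24, False),
    Employee("D", 12, True),
]
retained, rate = experience_retention(staff)
print(f"{retained} person-months retained ({rate:.0%} of starting experience)")
```

Weighting by person-months rather than headcount means losing a five-year veteran counts for more than losing a recent hire, which is the point of asking. Sending every vendor the same worksheet keeps their answers comparable.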

E. Financial stats

- Revenue per employee, last 3 years

- Profitability compared to relevant benchmark (above, below, same)

- Working capital (cash, credit lines)

F. Client base

- Total clients served last 2 years

- Percent of revenue from largest customer, last 12 months

Not every company will be willing to share this information, but if they are, it’s a good sign of their commitment to transparency and collaboration with customers.

You want to select a vendor that is financially stable. Part of being stable is being profitable. Being profitable also indicates a low rate of mistakes and project failures. If a vendor doesn’t know what the profitability benchmarks are in their industry, that’s a bad sign with respect to their business acumen.

Knowing that a vendor has cash or credit lines to smooth out fluctuations in demand is important to you. It means they won’t be tempted to take work they aren’t well-suited for.

A diverse base of clients is a good sign. Service companies with more than 20-25% of their revenue from a single client are taking a large business risk.
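The concentration check above is simple arithmetic on the "percent of revenue from largest customer" figure. Here is a minimal Python sketch, assuming the vendor gives you revenue per client for the last 12 months. The function name, the threshold constant, and the revenue figures are hypothetical.

```python
def largest_customer_share(revenue_by_client: dict[str, float]) -> float:
    """Fraction of the last 12 months' revenue that came from the single largest client."""
    total = sum(revenue_by_client.values())
    if total <= 0:
        raise ValueError("total revenue must be positive")
    return max(revenue_by_client.values()) / total


# Low end of the 20-25% range above; treat it as a judgment call, not a hard rule.
CONCENTRATION_THRESHOLD = 0.20

# Hypothetical vendor response, in dollars.
revenue = {"Client A": 900_000, "Client B": 400_000, "Client C": 350_000, "Client D": 350_000}
share = largest_customer_share(revenue)
print(f"Largest client: {share:.0%} of revenue; concentration risk: {share > CONCENTRATION_THRESHOLD}")
```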

G. Culture

- Values, business practices, pictures, activities, etc.

Culture is critical to retaining the best people and having a sustainable business. By far the best way to gauge culture is to visit the office of each vendor you’re considering.

This section lets a vendor tell you about the “soft” side of their company. It’s also interesting to see how a very open-ended question like this is handled. Software design and development are inherently open-ended and creative. A rigid, uncreative, strict or boring culture is unlikely to create a compelling new software product.

II. People

A. Total project staff

B. Publications, presentations, blogs, awards, etc. (company or employees)

C. Professional development activities

D. Histogram of hours worked by developers per week

Companies that make employment commitments and invest in their employees’ professional development are more likely to have great people whom they know well and whom you can count on for your project.

Look for travel and participation in conferences and workshops as well as local user groups.

In the service business, people who care innovate. That often shows up in writing of some kind. Look for a generosity of spirit in sharing those lessons and ideas with the world.

In an industry that coined the term “death march,” software developers who work at a consistent, sustainable pace are unfortunately rare. Such a pace is also a really good sign that the team has a repeatable, reliable rate of production, can estimate and plan well, and will be cheerful, creative, and pleasant to work alongside.

A side bonus of asking for working hours data is that it tells you the vendor tracks their time. This is a good indicator of discipline, ability to estimate, and of analytic rigor.

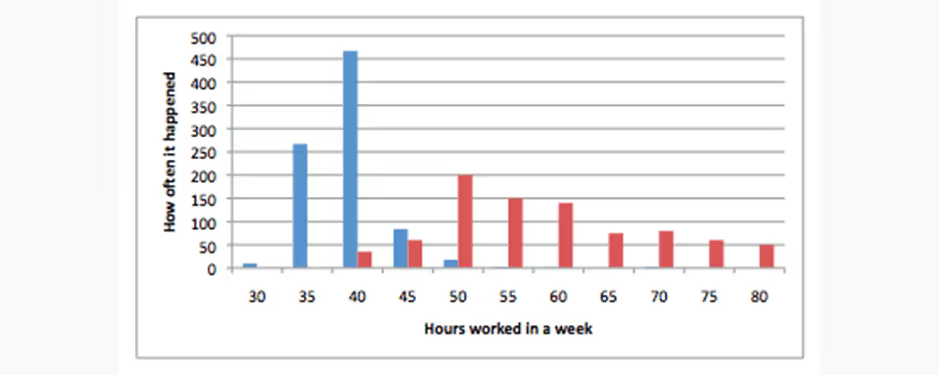

Consider the histogram above. The blue series shows developers that typically work 35-40 hours per week. There are very few weeks that the blue team has to work more than that, and they also seldom work less than that. The red team, on the other hand, has a much broader distribution of hours in a work week. They are as likely to work 80 hours as they are 45. The red team’s pace is not only less sustainable on a personal level, it’s a sign that their process or business practices lead to unpredictable work weeks.
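If a vendor sends raw weekly hours instead of a chart, you can summarize the spread yourself. This minimal Python sketch uses the standard `statistics` module and reports the mean, the standard deviation, and the share of developer-weeks inside a 35-40 hour band. The function name and both data sets are made up for illustration; they are not the data behind the chart.

```python
import statistics


def pace_summary(weekly_hours: list[float], low: float = 35, high: float = 40) -> dict[str, float]:
    """Summarize how consistent a team's weekly hours are (needs at least two weeks)."""
    in_band = sum(1 for h in weekly_hours if low <= h <= high)
    return {
        "mean": statistics.mean(weekly_hours),
        "stdev": statistics.stdev(weekly_hours),  # sample standard deviation
        "share_in_band": in_band / len(weekly_hours),
    }


# Hypothetical timesheet data, one entry per developer-week.
blue = [38, 40, 39, 37, 40, 36, 40, 38, 39, 41]
red = [45, 80, 50, 30, 70, 45, 60, 35, 80, 55]

for name, hours in [("blue", blue), ("red", red)]:
    s = pace_summary(hours)
    print(f"{name}: mean {s['mean']:.1f} h, stdev {s['stdev']:.1f} h, "
          f"{s['share_in_band']:.0%} of weeks in 35-40 h")
```

A small standard deviation and a high in-band share correspond to the narrow blue distribution. A wide spread like the red team's is the signal worth asking about.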

III. Relevant Projects

A. Overview

- Client

- Context & business goals

- Responsibility on project

B. Size (person hours by staff function)

C. Duration (calendar dates)

D. Results and outcomes

Two case studies from projects similar to yours will tell you a lot more about a given vendor’s performance on your project than asking them to speculate about the details of your project itself.

Look for the vendor to identify the responsibilities of the customer. Being a customer is a demanding job. If they don’t include you in the project, watch out.

Look for the vendor to have understood the business goals and motivations of their client.

Bigger projects are more challenging than smaller projects. Ad hoc project management and process are less likely to sink a one-person project than a ten-person project.

Look for results and outcomes that are both technical and customer-perspective: increased sales, decreased costs, improved usability, etc.

E. Sample work products

- Requirements

- Design

- Development

- Validation

- Project management

F. Client reference

Look to see artifacts from all phases and practices of a single project. This cohesive view will give you an idea of the vendor’s process from beginning to end. Informal artifacts such as whiteboard sketches, note cards, and spreadsheets aren’t necessarily bad. They might indicate a very efficient, integrated team and collaborative process without the expense of heavyweight formalism and strict handoffs between isolated specialists.

Expect to see examples of reports to customers that indicate actual progress based on measurable data, and not just vague statements of completion or accomplishment.

Validation in particular is interesting as it should be applied across all aspects of a project. For example, a collaborative session with project stakeholders that identifies and filters a set of features is a good way of validating understanding between the development team and the customer. User research is a good way of validating personas and hence product design decisions. Automated test suites validate source code.

With the case study background you receive, be sure to call the corresponding client references to get their take on the project.

IV. Failed Project

A. Overview

B. Size (person hours by staff function)

C. Duration (calendar dates)

D. Analysis

- Why considered a failure?

- What specifically went wrong? Why?

- What was the final outcome?

- Further work done for this customer?

- Actions taken, company impact?

Being willing to admit their mistakes is a good sign that a vendor is secure and has a strong track record.

Look for an even-handed and self-reflective root cause analysis—a failed project is usually due to both the vendor and the client. What lessons were learned? What changes were implemented as a result of these lessons? Was responsibility taken by the vendor?

V. Proposed Project

A. Summarize your understanding of the project

B. Risk analysis

Knowing what risks the vendor identifies tells you about how much they’ve thought about your project, how familiar they are with the technology and domain, and how experienced they are in general.

C. Team being proposed

- Size, roles

- Location of staff

- Employment status (W2, contract)

- Other responsibilities for each team member

- Education

- Professional experience

- Tenure with company

Pay close attention to how the vendor would staff your project, but don’t expect that the identical team from the vendor you select will be available if your RFP and selection process takes some time.

Look for people dedicated to your team and clear role distinctions.

Give big points to a co-located team.

D. Approach

- Responsibilities

- Communication & coordination

- Requirements

- Estimating

- Tracking & reporting

- Delivery

This is the vendor’s chance to describe how they tackle projects. It’s intentionally vague, as you want to know that they have strong beliefs and practices on the non-technical aspects of projects.

Look for regular, incremental deliveries of tested software for you to evaluate.

Look for a strong pattern supported by appropriate tools for coordinating the team and the project stakeholders.

Look for strong practices to suss out requirements and build mutual understanding.

Expect to hear how change is handled. What about emerging requirements? Misunderstandings? Ambiguity?

What data are you given to manage your project? Can the vendor predict completion dates reliably? How is growth in scope and consequent budget and deadline impact managed?

How are estimates done? Is the project responsibly buffered for natural variation in task estimates?
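Vendors can buffer for estimate variation in several ways. One common technique from agile planning practice is a root-sum-of-squares project buffer. Each task gets a likely and a pessimistic estimate, and the plan adds a single buffer equal to the square root of the summed squared differences. It is used here only as an example of what a good answer might look like, not as any particular vendor's method. The function name and the task numbers are hypothetical.

```python
import math


def buffered_estimate(tasks: list[tuple[float, float]]) -> tuple[float, float]:
    """Each task is (likely_hours, pessimistic_hours).

    Returns (sum of likely estimates, that sum plus a root-sum-of-squares buffer).
    """
    likely_total = sum(likely for likely, _ in tasks)
    # One shared buffer: the square root of the summed squared per-task spreads.
    buffer = math.sqrt(sum((pessimistic - likely) ** 2 for likely, pessimistic in tasks))
    return likely_total, likely_total + buffer


# Hypothetical task estimates in hours: (likely, pessimistic).
tasks = [(8, 16), (4, 6), (12, 20), (6, 12)]
likely, buffered = buffered_estimate(tasks)
padded = sum(pessimistic for _, pessimistic in tasks)  # padding every task instead
print(f"likely {likely} h, buffered {buffered:.1f} h, every task padded {padded} h")
```

The shared buffer is smaller than padding every task, because it's unlikely that every task hits its pessimistic figure at once. A vendor who can explain reasoning along these lines is thinking about variation deliberately.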

E. Technical practices

- Design

- Development

- Quality

This section is similar to section D but focuses on technical practices.

Look for strong beliefs and specific practices. Your goal should be to select a vendor that knows what works based on experience and can tell you (in great, gory detail if desired) why they do things the way they do them.

Expect to be told about many different types of design (this question is intentionally broad): product design, personas, user research, information architecture, interaction design, visual design, design implementation.

Look for a description of software design principles and patterns. Expect to learn how developers validate their work (automated tests or test-driven development, for instance). Look for short iterations with regular delivery (and corresponding deployment automation) of tested software to the customer. Favor vendors that get “end-to-end” as soon as possible in a project and organize work in features meaningful to users and customers, not infrastructure.

What form of testing and validation are done? At what frequency? Is this done independently of the development team? How is progress tracked? How are results reported?

Which aspects of testing are automated, which are manual? This helps you understand how much regression testing is possible.

F. Support

- Future features and extensions

- Bugs & failures

- Rates and response times

- Hosting & monitoring

G. Preferred business relationship

Successful projects will require work in the future. How does the vendor handle this work? What practices are in place to bring a project back into an active state of development?

What about bugs that are discovered in the field? Who pays to fix them? How responsive is the vendor to these bugs?

If your project is a web app, who is selecting a hosting vendor? Who is monitoring the web app?

Finally, let the vendor tell you what their preferred business relationship is. Each development process has a business relationship that is a natural match. You should expect to learn how they invoice, what they charge, who owns the code being developed, who accepts and approves their work, what happens if your budget changes, what happens if you’re unhappy, etc.

VI. Additional References

A. Context, contact info

Be sure to call these references as well as those provided with the case studies in section III. You obviously won’t be given references to unhappy customers, but with the right questions you can gauge a lot about whether the development process described is followed and what working with the vendor is like as a customer.

Questions you might consider asking include:

- Who did you communicate with on the team? (Look for regular, effective, direct communication with the developers, designers, and testers that did the work. An account manager intermediary can be problematic.)

- How often did you receive a build of the software that you could evaluate? (Weekly is totally possible but rare. Monthly is the longest you should tolerate.)

- How was your feedback on development builds handled? (It should be formally captured somewhere.)

- Were you able to steer development and influence features? (The team should be able to accommodate new ideas and refinement of features.)

- Were you given regular status reports that included the amount of work accomplished and the work remaining?

- Was the work accomplished measured or guessed at?

- How often did you hear things like “we can’t do that because you didn’t tell us earlier?”

- Was your project budget met? (Budgets aren’t always fixed, so this may be a complicated answer.)

- Which levers of control (cost, scope, time, quality) were you able to exercise on your project? (Scope is by far the most effective. Quality is the lever of death.)

- How did the team make estimates? Were those estimates accurate in aggregate? (Any single task estimate may be off, but in aggregate, over a larger body of work or a longer period of time such as two weeks, estimates should be pretty accurate.)

- Were you actively encouraged to limit the scope of your application? (Clients usually err on the side of wanting to build too much. Building less and getting a product into customers’ hands earlier is often a better strategy.)
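The point about aggregate accuracy in the estimating question can be illustrated with a small Python sketch. It assumes a reference or the vendor's own reports give you per-task estimates and actual hours for one iteration. The function and the numbers are hypothetical. The sketch compares the average per-task miss with the miss on the iteration total.

```python
def estimate_accuracy(estimates: list[float], actuals: list[float]) -> tuple[float, float]:
    """Return (mean per-task relative error, relative error of the summed totals)."""
    if len(estimates) != len(actuals) or not estimates:
        raise ValueError("need matching, non-empty lists")
    per_task = sum(abs(a - e) / e for e, a in zip(estimates, actuals)) / len(estimates)
    aggregate = abs(sum(actuals) - sum(estimates)) / sum(estimates)
    return per_task, aggregate


# Hypothetical two-week iteration: individual tasks miss in both directions.
estimates = [8, 4, 12, 6, 10]
actuals = [12, 3, 9, 8, 9]
per_task, aggregate = estimate_accuracy(estimates, actuals)
print(f"average task off by {per_task:.0%}, iteration total off by {aggregate:.1%}")
```

Misses only cancel out when they go in both directions. A team that consistently underestimates will be off in aggregate too, which is exactly what the reference question is meant to surface.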
